Good Abstractions

Something today had me thinking about CS/ECE 252, one of the first college classes I took at UW Madison, during my senior year of high school. A class from 2014 is a weird thing to have on the brain on a Saturday in 2025, but here we are. What stuck with me was a lesson from that class, not just about computer science, but about engineering as a whole.

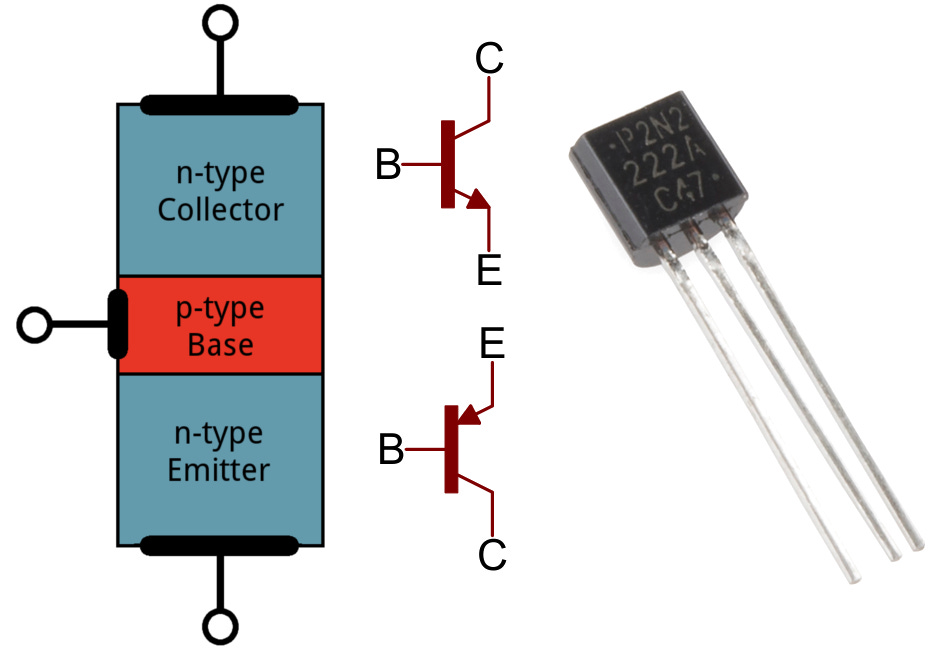

The foundation of the class was what makes computers tick internally. It moved up the stack from direct current flowing through the transistor, to the basic binary logic built out of that electricity, to programming in binary and assembly, and eventually to the foundations of what become compiled and interpreted languages. It’s where computer engineering (processors and hardware) meets computer science (programming and code).

I learned a lot about computers in that class, but I also learned about something potentially more important: abstractions.

Human beings have been abstracting for millennia. It’s the foundation of our engineered existence. We create entire sciences, like materials science, to act as foundational components of other disciplines like mechanical or aerospace engineering. We write contracts with each other that say “when I sell you this steel, it has a melting point of x degrees C and a stress tolerance of abc.” Then the mechanical engineers working with that steel never think about the contract again. They just build on top of it.

When you’re programming in assembly, you’re not thinking about the bits flipping in the processor, how data is being moved between L1 cache and RAM, or how the power supply converts alternating current to direct current for the motherboard. You’re thinking about the logic of the basic units in assembly. In the same way, when you’re programming in C++, you’re not thinking about how the compiled binary underneath gets loaded by the OS. It’s also the same idea as writing a simple Python script without understanding EVERYTHING that happens underneath to get it to run on the hardware. It just happens, how you expect it, every time. These are good abstractions.
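To make that last point a little more concrete, here is a minimal sketch of peeking one layer beneath a Python function using the standard library’s `dis` module. The names `add` and `bytecode_ops` are made up for illustration, and the exact instruction names printed will vary between Python versions.

```python
import dis


def add(a, b):
    """The level we normally think at: add two numbers."""
    return a + b


def bytecode_ops(func):
    """Return the names of the bytecode instructions compiled for func."""
    return [instruction.opname for instruction in dis.get_instructions(func)]


if __name__ == "__main__":
    # The abstraction: call the function and trust the result.
    print(add(2, 3))

    # One layer down: the instructions the interpreter actually steps through.
    for opname in bytecode_ops(add):
        print(opname)
```

The output matters less than the fact that you never have to look at it for `add` to behave the way you expect. And this is only one layer down. The interpreter, the OS, and the hardware are all still hidden beneath it.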

This is a long-winded way to get to the abstractions of LLMs. They feel new, but I think the abstraction underneath them, the abstracted ability to create and share ideas, has possibly existed among us socially for a long time without us putting a label on it. Right now is just the first time machines have done it.

This is half-baked, but there are also abstractions where we ourselves are one of the building blocks: the ability for ideas themselves to evolve and morph between human, and now machine, minds. On the machine side, that ability possibly emerges from foundational AI technologies like the transformer and the neural net. Sometimes it comes in the form of concepts, and other times as emotions, reactions, and feelings.

It has me wondering whether intelligence (and related ideas) is some naturally emergent property of the universe, whether we’re still at the very beginning of exploring the abstractions it creates, and what else might exist there.